Fiddling around with Erlang. Wanted to determine the most efficient collection datastructure for membership testing.

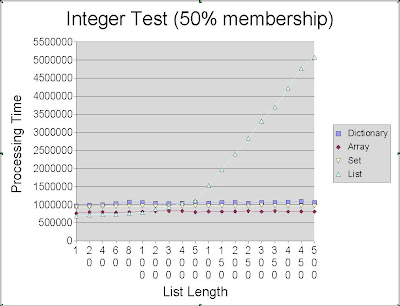

The graph above are the results of that testing. In this test I used integers, although my actual problem does not use integers it did allow me to include the array module in the test.

We can see that using native lists is the faster for small lists. In particular, it is faster to use lists for up to 300 members if your searches are expecting 100% membership rate. Clearly this does not obviously look like a winner, but using lists instead of dicts or arrays could be a good choice for performant mapping. Somthing that was left out for the array test was the fixed size array, this could probably change the performance quite a bit.

Please note that the X-axis is not regularly spaced. I whipped that graph up in open-office calc and its charting capabilities are a bit weak. That sudden climb of the list based solution is not due to some weird threshold but because the test suddenly jumps from incrementing by 100 to incrementing by 500. Oh and the processing time is in milliseconds :)

Here is the Erlang code used for that test.

integer_test(Max) ->

Iterations = 500000,

Number_List = lists:seq(1,Max),

Degree_Of_Error = 1,

Self_Dict = fun(Number,Number_Dict) ->

dict:store(Number,Number,Number_Dict)

end,

Test_Dict = lists:foldl(Self_Dict,dict:new(),Number_List),

Self_Array = fun(Number,Number_Array) ->

array:set(Number,Number,Number_Array)

end,

Test_Array = lists:foldl(Self_Array,array:new(),Number_List),

Make_Set = fun(Number,Number_Set) ->

sets:add_element(Number,Number_Set)

end,

Test_Set = lists:foldl(Make_Set,sets:new(),Number_List),

Access_Dict = fun() ->

Number = random:uniform(Max*Degree_Of_Error),

dict:find(Number,Test_Dict)

end,

{Time_Dict,_Return_Dict} = timer:tc(util,do_n_times,[Access_Dict,Iterations]),

Access_Array = fun() ->

Number = random:uniform(Max*Degree_Of_Error),

array:get(Number,Test_Array)

end,

{Time_Array,_Return_Array} = timer:tc(util,do_n_times,[Access_Array,Iterations]),

Access_Set = fun() ->

Number = random:uniform(Max*Degree_Of_Error),

sets:is_element(Number,Test_Set)

end,

{Time_Set,_Return_Set} = timer:tc(util,do_n_times,[Access_Set,Iterations]),

Access_List = fun() ->

Number = random:uniform(Max*Degree_Of_Error),

lists:member(Number,Number_List)

end,

{Time_List,_Return_List} = timer:tc(util,do_n_times,[Access_List,Iterations]),

{Time_Dict,Time_Array,Time_Set,Time_List}.

***UPDATE***

I had some odd results trying to apply this to my sat solver. I managed to track down the reason. lists:member/2 is a BIF. So it has some fast as C implementation. If you try to recreate this test writing your own Erlang member function the behaviour is *very* different.